In early November, Sam Altman announced new models and developer products, taking OpenAI one step closer to becoming a true platform. One of the new capabilities announced for GPT-4 was the ability for users to create their own chatbots and agent-like experiences with a click.

We were excited to introduce Pelles GPT, a specialized tool exclusively designed for MEP subcontractors’ estimators and engineers. After reviewing its benefits (here and here), it is now time to discuss its limitations and disadvantages. In other words: why GPT isn’t good enough for the industry, and why the industry should have dedicated AI tools.

The top 5 limitations of GPT for construction

We find GPT to be less than ideal for construction professionals to use in their daily workflow because of the five limitations listed below.

- GPT struggles with specialized topics and industry-specific context

Despite ChatGPT’s access to a large amount of information, it struggles to answer questions that are context-specific or require information on a specific topic.

- GPT hallucinates facts

ChatGPT tends to lie and fabricate facts to answer a question. OpenAI themselves have published a note about GPT’s tendency to provide incorrect answers.

- GPT lacks common sense

ChatGPT generates human-like responses but lacks common sense. That leads to nonsensical responses to certain questions or situations.

- GPT doesn’t have internet access or real-time data

The model’s knowledge cut-off date is April 2023. It doesn’t have access to anything after 04/23 or real-time data.

- GPT’s answers are not explainable and users can’t work with the document

ChatGPT answers questions. It doesn’t explain the underlying assumptions or facts. It also doesn’t enable users to find the relevant sources within the document they question to double-check the answers.

Real-life examples of GPT’s limitations

To check GPT’s limitations we uploaded a bid package and asked it some questions. We used a bid package for mechanical works for the renovation of a ~4,000-square-foot restaurant in NY.

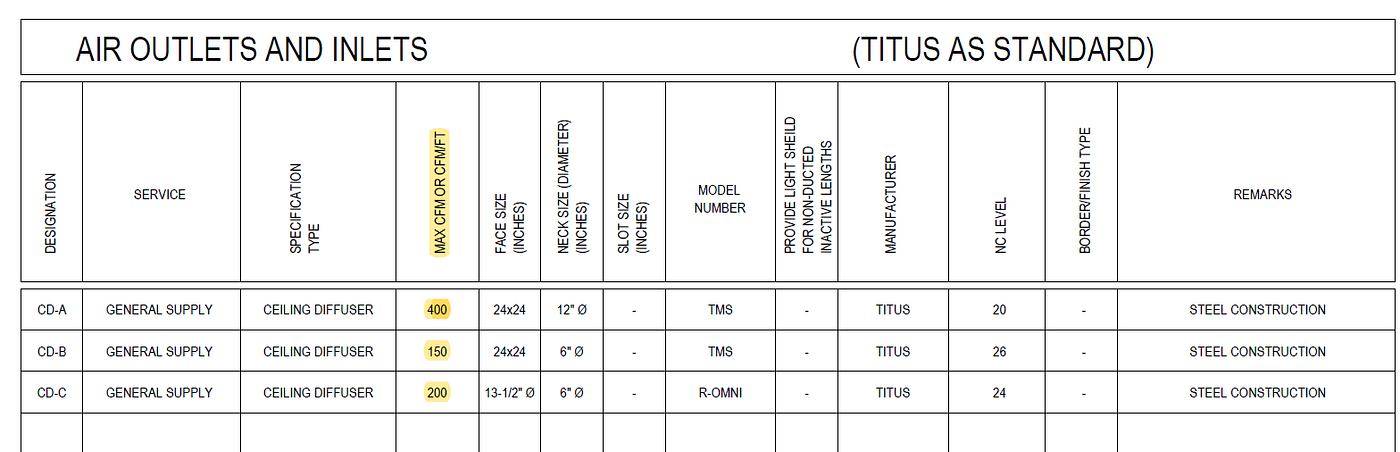

Q1: How many ceiling diffusers are on the ground floor?

At first sight, this seems like a legitimate answer. However, it fabricates facts and demonstrates GPT’s lack of common sense: even a rookie estimator knows that these numbers don’t add up for a 4,000-square-foot project.
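The kind of sanity check an estimator does in their head can be sketched in a few lines of Python. This is only an illustration of the reasoning, not part of any tool described here: the function name `count_is_plausible` and the coverage band in `PLACEHOLDER_BAND` are hypothetical, and the band values are placeholders rather than an industry rule of thumb.

```python
def count_is_plausible(count: int, floor_area_sqft: float,
                       min_sqft_per_unit: float, max_sqft_per_unit: float) -> bool:
    """Return True if the floor area served per item falls inside the given band."""
    if count <= 0 or floor_area_sqft <= 0:
        return False
    sqft_per_unit = floor_area_sqft / count
    return min_sqft_per_unit <= sqft_per_unit <= max_sqft_per_unit


# Hypothetical placeholder band (sq ft served per diffuser).
# These are NOT real design values; an estimator would supply their own.
PLACEHOLDER_BAND = (50.0, 400.0)

# 4,000 sq ft with 20 diffusers -> 200 sq ft each, inside the band.
print(count_is_plausible(20, 4000, *PLACEHOLDER_BAND))   # True
# 4,000 sq ft with 400 diffusers -> 10 sq ft each, outside the band.
print(count_is_plausible(400, 4000, *PLACEHOLDER_BAND))  # False
```

A check like this cannot say what the right quantity is. It only flags an answer that is far outside what the project size allows, which is the step GPT skipped here.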

The GPT got the diffuser types right but the quantities wrong. To get the quantities right, it needed industry-specific context: the number of diffusers isn’t shown on the schedule and has to be counted on the drawing (assuming the drawing is complete). It also needed strong multimodal abilities to count items on drawings. Both of these are lacking at the moment.

This is where those numbers came from:

This also highlights how hard it is to double-check GPT’s sources while working with it.

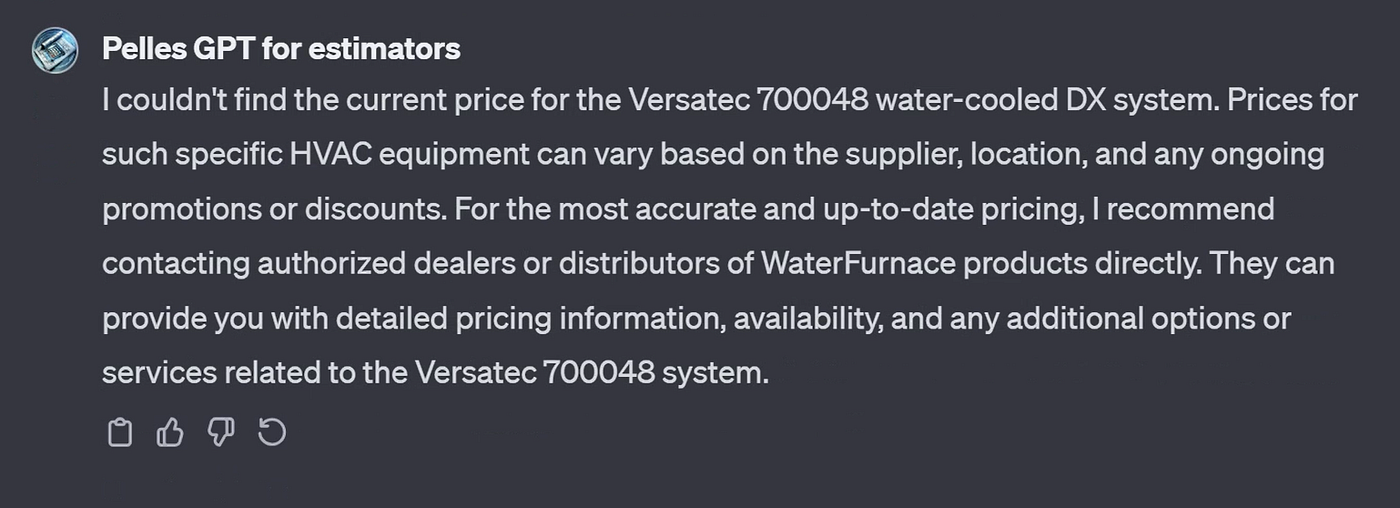

Q2: What is the current price of Versatec 700–048?

Per the schedule, this project requires water-cooled DX systems of that model. Remember that GPT doesn’t have real-time data? Instead of simply stating that, it generates a long response that never answers the question.

Q3: What equipment types are used in this project?

Answering this question requires context, nuance, and common sense. To get it right, GPT needs not only to identify what is considered equipment but also to understand that the objective is to identify the major systems, not each fan or diffuser.

This is, again, a very partial answer. GPT lacks the common sense and context to know that the MAU and PCU are important. Unit designations (AC-1, AC-2, etc.) are mistakenly taken as model numbers.

While OpenAI’s GPT already delivers impressive outcomes for MEP professionals, the real test lies in tailoring AI models to provide customized, high-value solutions specific to the MEP sector.

This endeavor presents an exciting yet unmastered frontier.